Dense feature pyramid network for cartoon dog parsing

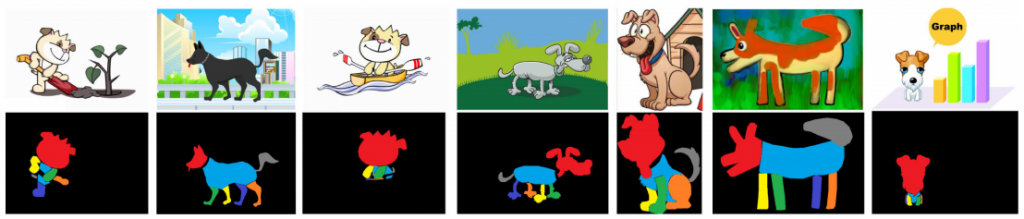

While traditional cartoon character drawings are simple for humans to create, they remain highly challenging for machines to interpret. Parsing, i.e., fine-grained semantic segmentation of images, is one way to address this problem. Although parsing is well studied on naturalistic images, research on cartoon parsing is very sparse. Owing to the lack of available datasets and the diversity of artwork styles, cartoon character parsing is more difficult than the well-known human parsing task. In this paper, we study one type of cartoon instance: cartoon dogs. We introduce a novel dataset for cartoon dog parsing and create a new deep convolutional neural network (DCNN) to tackle the problem. Our dataset contains 965 precisely annotated cartoon dog images with seven semantic part labels. Our new model, called dense feature pyramid network (DFPnet), makes use of recent popular semantic segmentation techniques to handle cartoon dog parsing efficiently. We achieve an mIoU of 68.39%, a mean accuracy of 79.4% and a pixel accuracy of 93.5% on our cartoon dog validation set. Our method outperforms state-of-the-art models for similar tasks trained on our dataset: CE2P for single human parsing and Mask R-CNN for instance segmentation. We hope this work can serve as a starting point for future research on digital artwork understanding with DCNNs. Our DFPnet and dataset will be publicly available.
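The three scores reported in the abstract (mIoU, mean accuracy, pixel accuracy) are standard semantic-segmentation metrics. The sketch below shows one common way to compute them from a pixel-level confusion matrix; it is not the authors' evaluation code. The helper names `confusion_matrix` and `segmentation_metrics` are hypothetical, and two conventions are assumptions: labels are integers in `0..num_classes-1` (whether background counts as a class alongside the seven part labels is not stated here), and classes with no ground-truth pixels (for accuracy) or an empty union (for IoU) are skipped when averaging.

```python
from typing import Dict, List, Sequence


def confusion_matrix(gt: Sequence[int], pred: Sequence[int], num_classes: int) -> List[List[int]]:
    """Hypothetical helper: rows are ground-truth classes, columns are predicted classes."""
    if len(gt) != len(pred):
        raise ValueError("gt and pred must contain the same number of pixels")
    cm = [[0] * num_classes for _ in range(num_classes)]
    for g, p in zip(gt, pred):
        if not (0 <= g < num_classes and 0 <= p < num_classes):
            raise ValueError(f"label out of range: gt={g}, pred={p}")
        cm[g][p] += 1
    return cm


def segmentation_metrics(cm: List[List[int]]) -> Dict[str, float]:
    """Hypothetical helper: pixel accuracy, mean (per-class) accuracy and mean IoU."""
    k = len(cm)
    total = sum(sum(row) for row in cm)
    if total == 0:
        raise ValueError("confusion matrix is empty")
    correct = sum(cm[i][i] for i in range(k))
    gt_counts = [sum(cm[i]) for i in range(k)]                          # pixels labelled i
    pred_counts = [sum(cm[j][i] for j in range(k)) for i in range(k)]   # pixels predicted as i

    # Per-class accuracy TP / (TP + FN); assumption: skip classes with no ground-truth pixels.
    accs = [cm[i][i] / gt_counts[i] for i in range(k) if gt_counts[i] > 0]

    # Per-class IoU TP / (TP + FP + FN); assumption: skip classes absent from both maps.
    ious = []
    for i in range(k):
        union = gt_counts[i] + pred_counts[i] - cm[i][i]
        if union > 0:
            ious.append(cm[i][i] / union)

    return {
        "pixel_accuracy": correct / total,
        "mean_accuracy": sum(accs) / len(accs),
        "mean_iou": sum(ious) / len(ious),
    }


# Usage on a toy 6-pixel label map with 3 classes.
cm = confusion_matrix([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], num_classes=3)
print(cm)                        # [[1, 1, 0], [0, 2, 0], [1, 0, 1]]
print(segmentation_metrics(cm))  # pixel_accuracy 4/6, mean_accuracy 2/3, mean_iou 0.5
```

On a real validation set, the confusion matrix would typically be accumulated over every image (summing per-image counts) before the metrics are computed, so each pixel carries equal weight; this pooling is again an assumption about the evaluation protocol rather than something the abstract specifies.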

Publication: Wan J, Mougeot G, Yang X. Dense feature pyramid network for cartoon dog parsing[J]. The Visual Computer, 2020: 1-13.

Preprint PDF: dfpn.pdf

Citation:

```bibtex
@article{wan2020dense,
  title={Dense feature pyramid network for cartoon dog parsing},
  author={Wan, Jerome and Mougeot, Guillaume and Yang, Xubo},
  journal={The Visual Computer},
  pages={1--13},
  year={2020},
  publisher={Springer}
}
```