Facial expressions recognition based on convolutional neural networks for mobile virtual reality

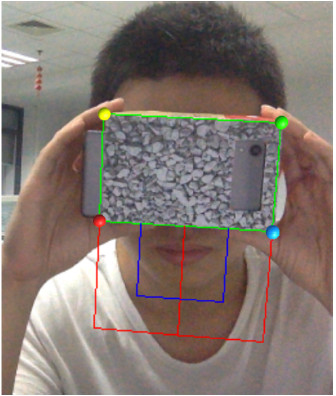

We present a new system that enables direct face-to-face interaction between users wearing head-mounted displays (HMDs) in a virtual reality environment. Because an HMD occludes the user’s face, VR applications and games are mainly designed for a single user. Even in multi-player games, players can communicate with each other only through audio input devices or controllers. To address this problem, we develop a novel system that allows users to interact with each other using facial expressions in real time. Our system consists of two major components: a face-processing component that automatically tracks and segments the face, and a facial expression recognition component based on convolutional neural networks (CNNs). First, our system tracks a specific marker on the front surface of the HMD and uses the extracted spatial data to estimate the face’s position and rotation for mouth segmentation. Then, with the help of an adaptive approach for histogram-based mouth segmentation [Panning et al. 2009], our system passes the processed lip pixel information to the CNN and obtains the facial expression results in real time. The results of our experiments show that our system can effectively recognize users’ basic expressions.
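As a rough illustration of what histogram-based mouth segmentation can look like, here is a minimal Python sketch. It is not the adaptive approach of Panning et al. 2009 and not the authors' implementation: it substitutes plain Otsu thresholding on a pseudo-hue channel, R / (R + G), on the assumption that lip pixels are redder than the surrounding skin. The function names `pseudo_hue`, `otsu_threshold`, and `segment_mouth` are hypothetical, and the input is assumed to be an RGB crop of the lower face that the marker tracking step has already located.

```python
import numpy as np

def pseudo_hue(rgb):
    """Return R / (R + G) per pixel; assumed to be higher on lips than on skin."""
    rgb = rgb.astype(np.float64)
    r, g = rgb[..., 0], rgb[..., 1]
    return r / (r + g + 1e-9)  # small epsilon avoids division by zero

def otsu_threshold(values, bins=64):
    """Pick the histogram bin edge that maximizes between-class variance (Otsu)."""
    hist, edges = np.histogram(values, bins=bins, range=(0.0, 1.0))
    centers = (edges[:-1] + edges[1:]) / 2
    weights = hist / hist.sum()
    w0 = np.cumsum(weights)            # probability of the lower class
    mu = np.cumsum(weights * centers)  # cumulative mean up to each bin
    w1 = 1.0 - w0                      # probability of the upper class
    valid = (w0 > 0) & (w1 > 0)
    between = np.zeros_like(w0)
    between[valid] = (mu[-1] * w0[valid] - mu[valid]) ** 2 / (w0[valid] * w1[valid])
    return edges[1:][np.argmax(between)]

def segment_mouth(rgb):
    """Return a boolean mask of candidate lip pixels in an RGB face crop."""
    hue = pseudo_hue(rgb)
    return hue > otsu_threshold(hue.ravel())
```

The resulting mask, or the pixels it selects, is the kind of input that could then be resized and passed to a CNN classifier. A real system would also need to handle lighting changes and open mouths, which a single global threshold does not address.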

Publication: Teng Teng and Xubo Yang. “Facial expressions recognition based on convolutional neural networks for mobile virtual reality.” In Proceedings of the 15th ACM SIGGRAPH Conference on Virtual-Reality Continuum and Its Applications in Industry-Volume 1, pp. 475-478. 2016.

Preprint PDF: Facial expressions recognition based on convolutional neural networks for mobile virtual reality.pdf

Citation:

@inproceedings{teng2016facial,

title={Facial expressions recognition based on convolutional neural networks for mobile virtual reality},

author={Teng, Teng and Yang, Xubo},

booktitle={Proceedings of the 15th ACM SIGGRAPH Conference on Virtual-Reality Continuum and Its Applications in Industry-Volume 1},

pages={475--478},

year={2016}

}