implemented a simple encoder-transformer-decoder net

Changed files:

- data/data_gaze_fovea_seq.json: 0 additions, 3618 deletions

- data/dino3x3/input_Cam000.png: 0 additions, 0 deletions

- data/dino3x3/input_Cam004.png: 0 additions, 0 deletions

- data/dino3x3/input_Cam008.png: 0 additions, 0 deletions

- data/dino3x3/input_Cam036.png: 0 additions, 0 deletions

- data/dino3x3/input_Cam040.png: 0 additions, 0 deletions

- data/dino3x3/input_Cam044.png: 0 additions, 0 deletions

- data/dino3x3/input_Cam072.png: 0 additions, 0 deletions

- data/dino3x3/input_Cam076.png: 0 additions, 0 deletions

- data/dino3x3/input_Cam080.png: 0 additions, 0 deletions

- data/lf_syn.py: 99 additions, 0 deletions

- data/other.py: 341 additions, 0 deletions

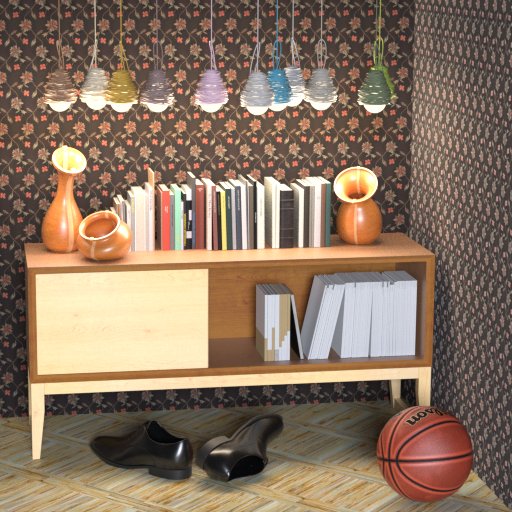

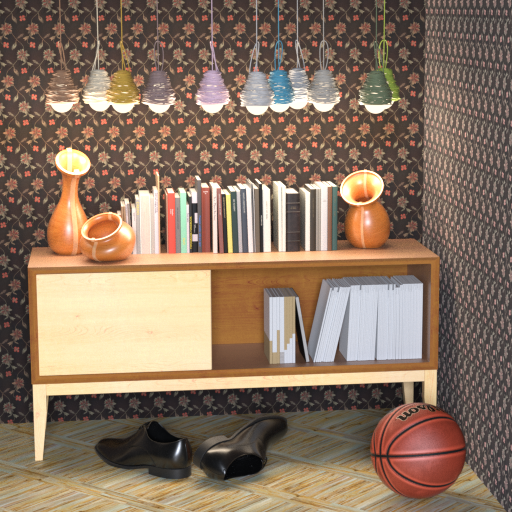

- data/sideboard3x3/input_Cam000.png: 0 additions, 0 deletions

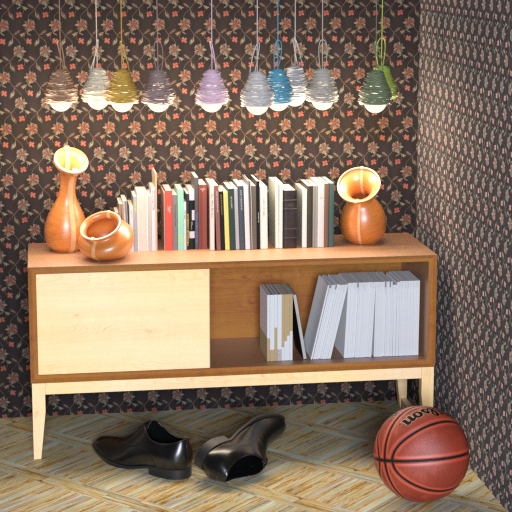

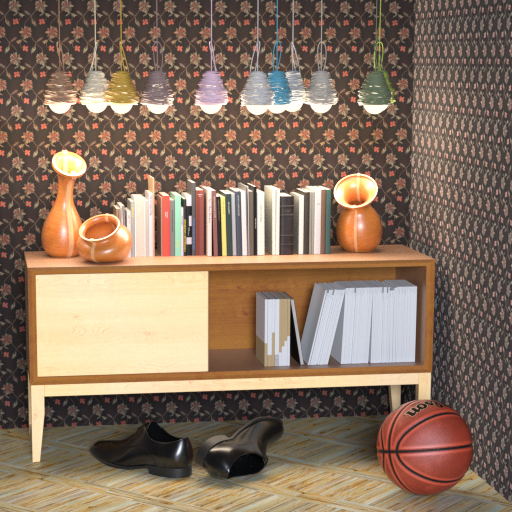

- data/sideboard3x3/input_Cam004.png: 0 additions, 0 deletions

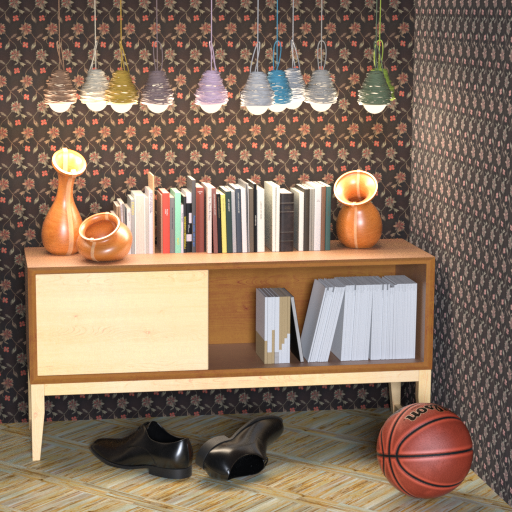

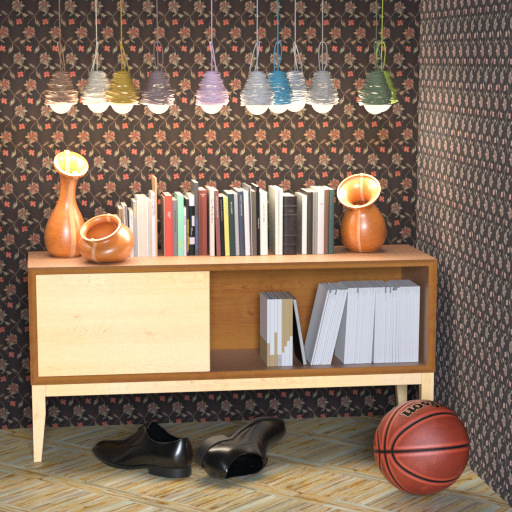

- data/sideboard3x3/input_Cam008.png: 0 additions, 0 deletions

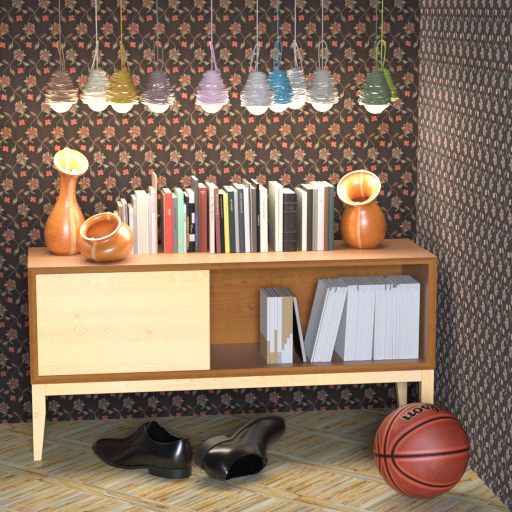

- data/sideboard3x3/input_Cam036.png: 0 additions, 0 deletions

- data/sideboard3x3/input_Cam040.png: 0 additions, 0 deletions

- data/sideboard3x3/input_Cam044.png: 0 additions, 0 deletions

- data/sideboard3x3/input_Cam072.png: 0 additions, 0 deletions

- data/sideboard3x3/input_Cam076.png: 0 additions, 0 deletions

data/data_gaze_fovea_seq.json

deleted

100644 → 0

data/dino3x3/input_Cam000.png

deleted

100644 → 0

339 KiB

data/dino3x3/input_Cam004.png

deleted

100644 → 0

338 KiB

data/dino3x3/input_Cam008.png

deleted

100644 → 0

338 KiB

data/dino3x3/input_Cam036.png

deleted

100644 → 0

335 KiB

data/dino3x3/input_Cam040.png

deleted

100644 → 0

335 KiB

data/dino3x3/input_Cam044.png

deleted

100644 → 0

335 KiB

data/dino3x3/input_Cam072.png

deleted

100644 → 0

332 KiB

data/dino3x3/input_Cam076.png

deleted

100644 → 0

331 KiB

data/dino3x3/input_Cam080.png

deleted

100644 → 0

331 KiB

data/lf_syn.py

0 → 100644

data/other.py

0 → 100644

data/sideboard3x3/input_Cam000.png

deleted

100644 → 0

480 KiB

data/sideboard3x3/input_Cam004.png

deleted

100644 → 0

481 KiB

data/sideboard3x3/input_Cam008.png

deleted

100644 → 0

480 KiB

data/sideboard3x3/input_Cam036.png

deleted

100644 → 0

478 KiB

data/sideboard3x3/input_Cam040.png

deleted

100644 → 0

478 KiB

data/sideboard3x3/input_Cam044.png

deleted

100644 → 0

478 KiB

data/sideboard3x3/input_Cam072.png

deleted

100644 → 0

475 KiB

data/sideboard3x3/input_Cam076.png

deleted

100644 → 0

476 KiB