DeepFocus Baseline

Showing 5 changed files with 414 additions and 0 deletions:

- data/try/_retinal_1.50.png (0 additions, 0 deletions)

- data/try/_retinal_1.75.png (0 additions, 0 deletions)

- data/try/_retinal_2.00.png (0 additions, 0 deletions)

- gen_image.py (105 additions, 0 deletions)

- main.py (309 additions, 0 deletions)

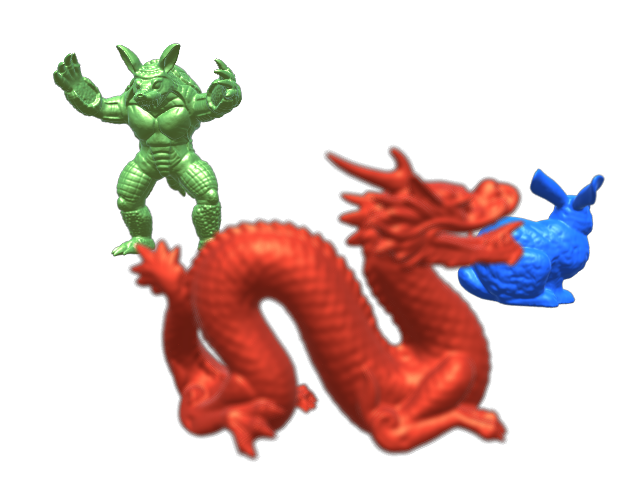

data/try/_retinal_1.50.png (new file, mode 0 → 100644, 228 KiB)

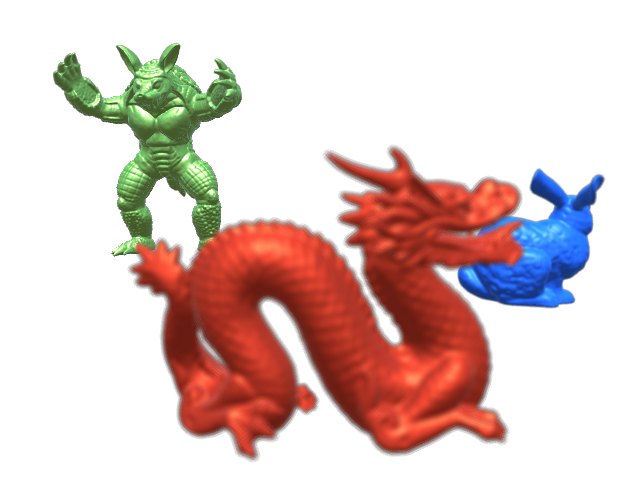

data/try/_retinal_1.75.png (new file, mode 0 → 100644, 232 KiB)

data/try/_retinal_2.00.png (new file, mode 0 → 100644, 233 KiB)

gen_image.py (new file, mode 0 → 100644)

main.py (new file, mode 0 → 100644)